Introduction:

Cross-NUMA tests as part of OPNFV Plugfest (Gambia) - January 2019

- VSPERF-Scenarios: P2P and PVP.

- Workloads: vSwitchd, PMDs and VNF.

- VNF: L2 Forwarding

- vSwitch: OVS and VPP.

Testcases Run:

Framesizes: 64, 128, 256, 512, 1024, 1280, 1518

- RFC2544 Throughput Test - NDR.

- Continuous Traffic Test - offered load at 100% of line rate.
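As a rough aid for reading the continuous-traffic results, the theoretical frame rate at 100% of line rate can be computed per frame size. This is a minimal sketch, not part of the original test tooling. It assumes standard Ethernet wire overhead of 20 bytes per frame (preamble, start-of-frame delimiter and inter-frame gap). The link speed is not stated in these notes, so the 10 Gbit/s value below is purely hypothetical.

```python
# Frame sizes used in the test runs (bytes, as listed above).
FRAME_SIZES = [64, 128, 256, 512, 1024, 1280, 1518]

# Per-frame wire overhead: 7 B preamble + 1 B SFD + 12 B inter-frame gap.
WIRE_OVERHEAD_BYTES = 20

# Hypothetical link speed; the source does not state the NIC speed.
ASSUMED_LINK_BPS = 10_000_000_000


def max_frame_rate(frame_size, link_bps=ASSUMED_LINK_BPS):
    """Return the theoretical maximum frames per second at line rate."""
    bits_on_wire = (frame_size + WIRE_OVERHEAD_BYTES) * 8
    return link_bps // bits_on_wire


for size in FRAME_SIZES:
    print(f"{size:>5} B: {max_frame_rate(size)} frames/s")
```

The received frame rate reported by the continuous test can then be compared against this ceiling for each frame size.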

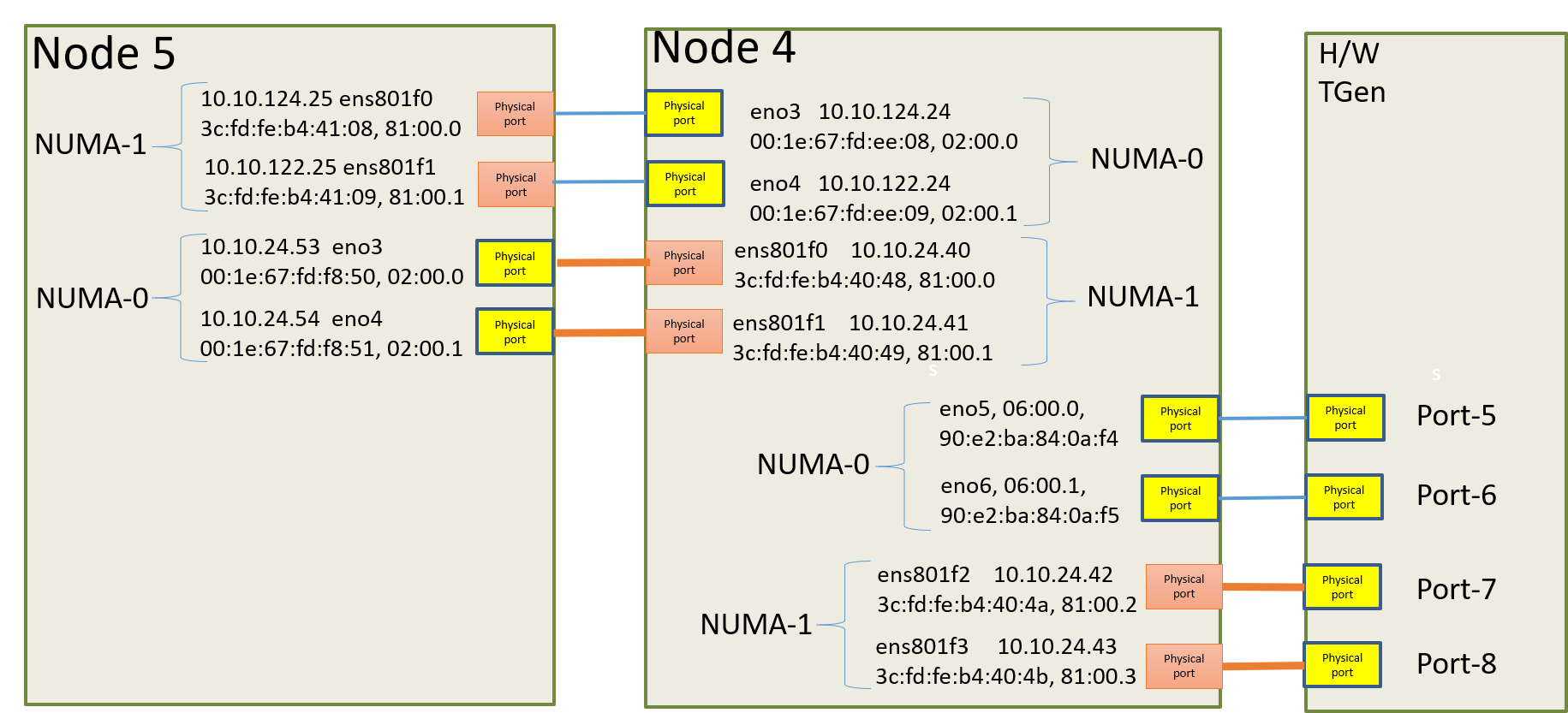

Testbed:

Node-4 (DUT), Node-5 (Software Traffic Generators) and H/W Traffic Generator.

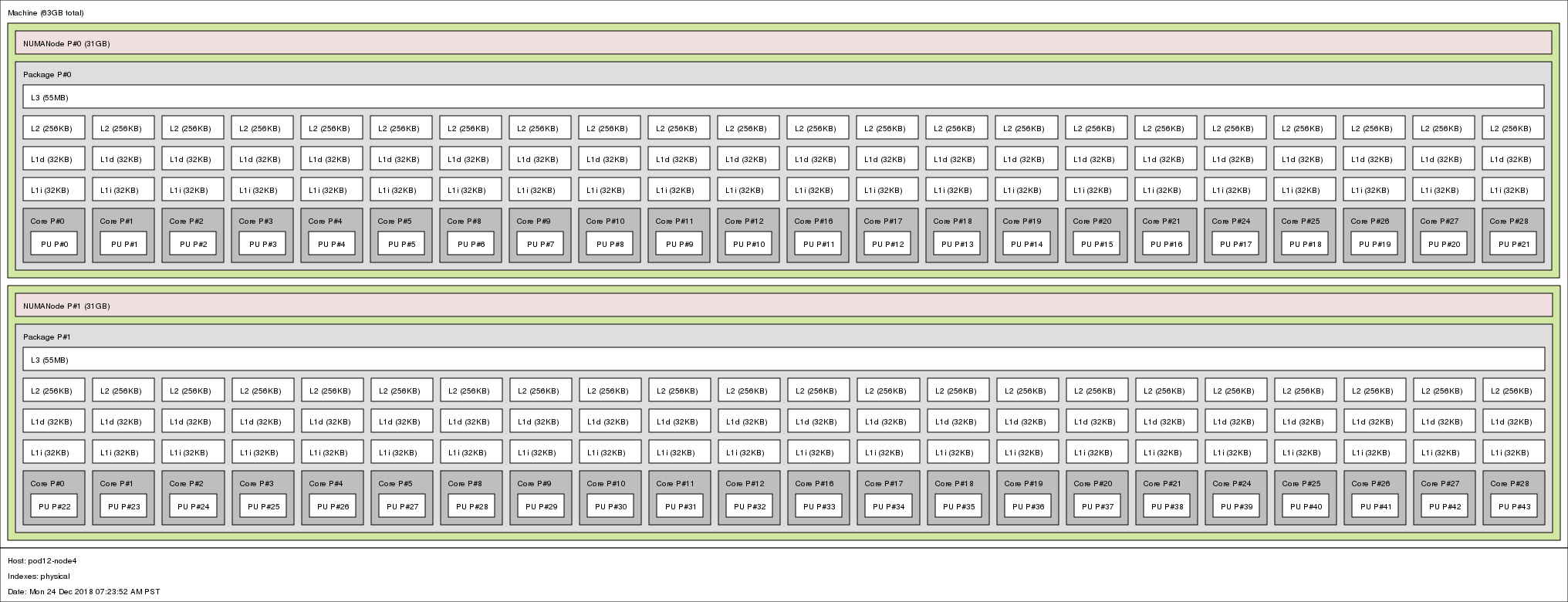

CPU Topology on DUT

P2P Scenarios

Summary of P2P Scenarios:

| Scenario | Possible core allocations | DUT ports, TGen (hardware) ports |
|---|---|---|
| 1 | PMDs: 4, 5 (0x30) | DUT: eno5, eno6 TGEN: 5, 6 |
| 2 | PMDs: 22, 23 (0xC00000) | DUT: eno5, eno6 TGEN: 5, 6 |
| 3 | PMDs: 4, 22 (0x400010) | DUT: eno5, eno6 TGEN: 5, 6 |
| 4 | PMDs: 4, 5 (0x30) | DUT: eno5, ens801f2 TGEN: 5, 7 |
| 5 | PMDs: 22, 23 (0xC00000) | DUT: eno5, ens801f2 TGEN: 5, 7 |
| 6 | PMDs: 4, 22 (0x400010) | DUT: eno5, ens801f2 TGEN: 5, 7 |
| 7 | PMDs: 4, 5 (0x30) | DUT: ens801f2, ens802f3 TGEN: 7, 8 |
| 8 | PMDs: 22, 23 (0xC00000) | DUT: ens801f2, ens802f3 TGEN: 7, 8 |
| 9 | PMDs: 4, 22 (0x400010) | DUT: ens801f2, ens802f3 TGEN: 7, 8 |
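The hex value in parentheses after each PMD list is a CPU bitmask in which bit n stands for core n. The sketch below shows how such a mask can be derived from a core list and decoded again. The helper names `cores_to_mask` and `mask_to_cores` are illustrative and not taken from any tool used in these tests.

```python
def cores_to_mask(cores):
    """Return the hex CPU mask that has bit n set for every core ID n."""
    mask = 0
    for core in cores:
        mask |= 1 << core
    return f"0x{mask:X}"


def mask_to_cores(mask):
    """Return the sorted core IDs whose bits are set in an integer mask."""
    return [bit for bit in range(mask.bit_length()) if (mask >> bit) & 1]


print(cores_to_mask([4, 5]))    # 0x30
print(cores_to_mask([22, 23]))  # 0xC00000
print(cores_to_mask([4, 22]))   # 0x400010
print(mask_to_cores(0x400010))  # [4, 22]
```

Decoding a mask this way is a quick cross-check that a core list and its mask agree.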

PVP Scenarios

Summary of PVP Scenarios:

Assumptions: NUMA-0 (cores 0-21), NUMA-1 (cores 22-43), vSwitch core #: 02.

| Scenario | Possible core allocations (PMDs) | Possible core allocations (VNF) | DUT ports, TGen (hardware) ports |
|---|---|---|---|
| 1 | PMDs: 4, 5, 6, 7 (0xF0) | VNF: 8, 9 | DUT: eno5, eno6 TGEN: 5, 6 |
| 2 | PMDs: 4, 5, 6, 7 (0xF0) | VNF: 22, 23 | DUT: eno5, eno6 TGEN: 5, 6 |
| 3 | PMDs: 4, 5, 6, 7 (0xF0) | VNF: 8, 22 | DUT: eno5, eno6 TGEN: 5, 6 |
| 4 | PMDs: 4, 5, 22, 23 (0xC00030) | VNF: 8, 9 | DUT: eno5, ens801f2 TGEN: 5, 7 |
| 5 | PMDs: 4, 5, 22, 23 (0xC00030) | VNF: 24, 25 | DUT: eno5, ens801f2 TGEN: 5, 7 |
| 6 | PMDs: 4, 5, 22, 23 (0xC00030) | VNF: 8, 24 | DUT: eno5, ens801f2 TGEN: 5, 7 |
| 7 | PMDs: 22, 23, 24, 25 (0x3C00000) | VNF: 26, 27 | DUT: ens801f2, ens802f3 TGEN: 7, 8 |
| 8 | PMDs: 22, 23, 24, 25 (0x3C00000) | VNF: 4, 5 | DUT: ens801f2, ens802f3 TGEN: 7, 8 |
| 9 | PMDs: 22, 23, 24, 25 (0x3C00000) | VNF: 4, 26 | DUT: ens801f2, ens802f3 TGEN: 7, 8 |

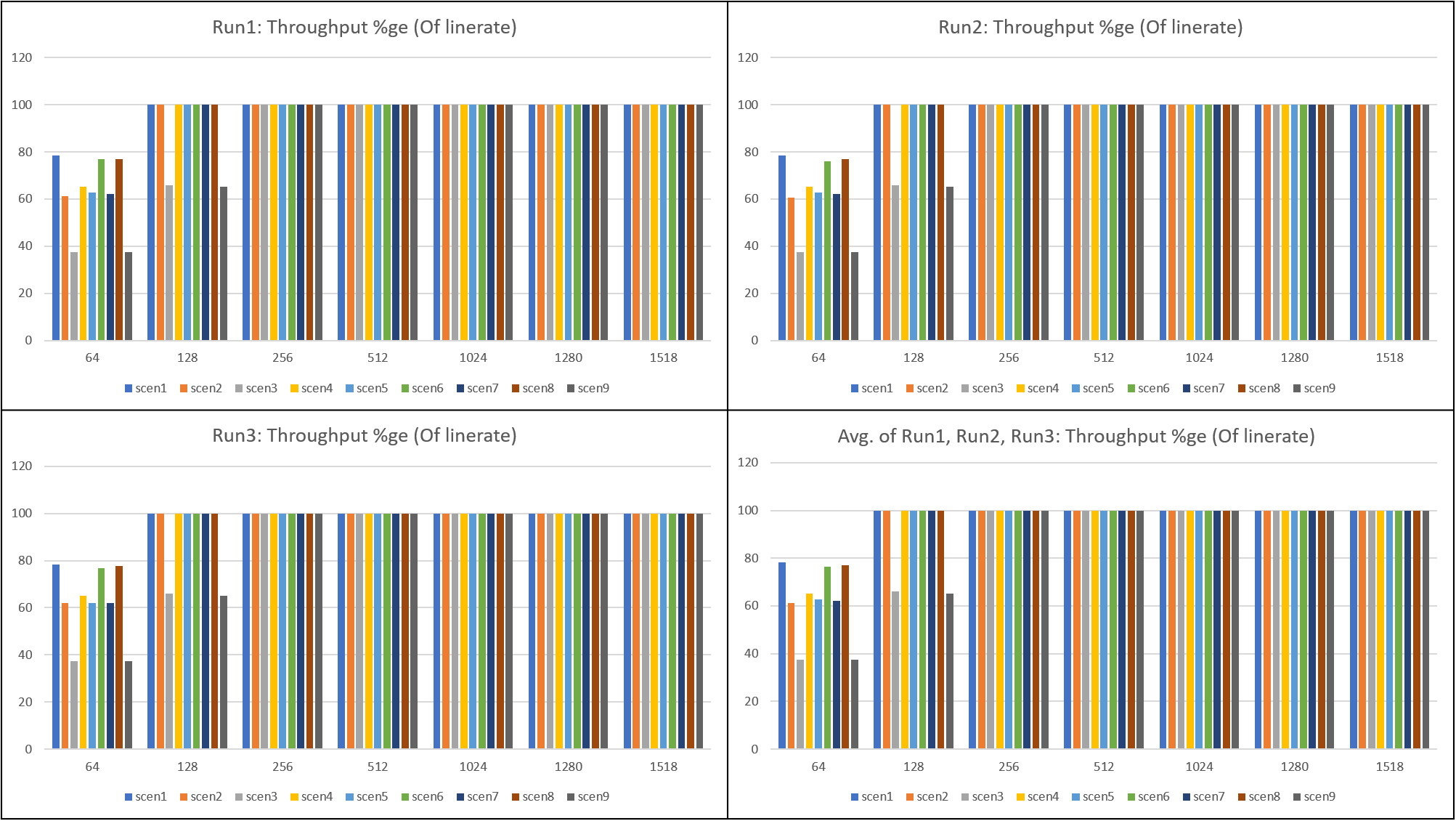

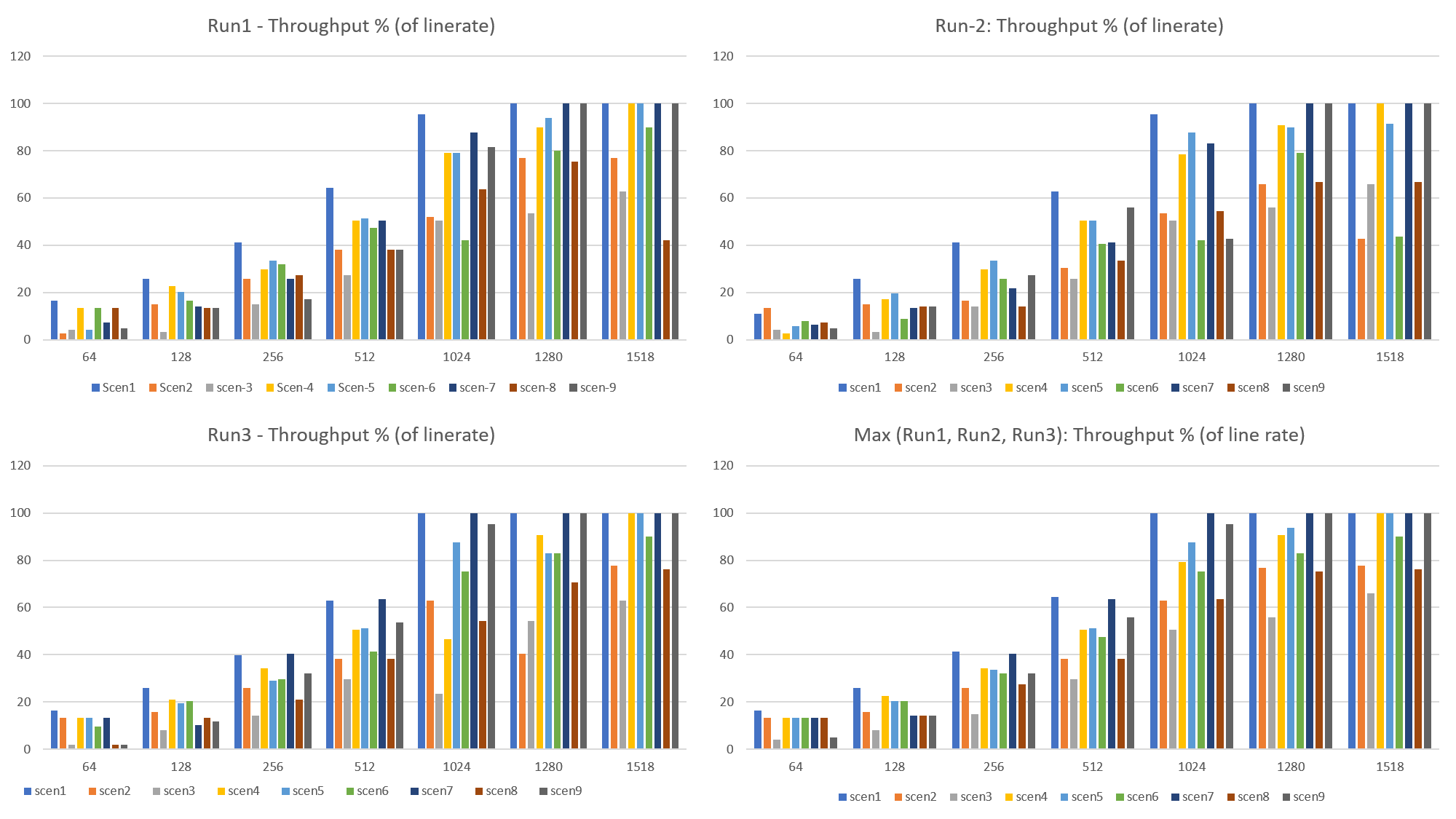

Results: P2P

RFC2544 Throughput Test Results

Continuous Throughput Test Results (Max Received Frame Rate at 100% of Line rate offered load)

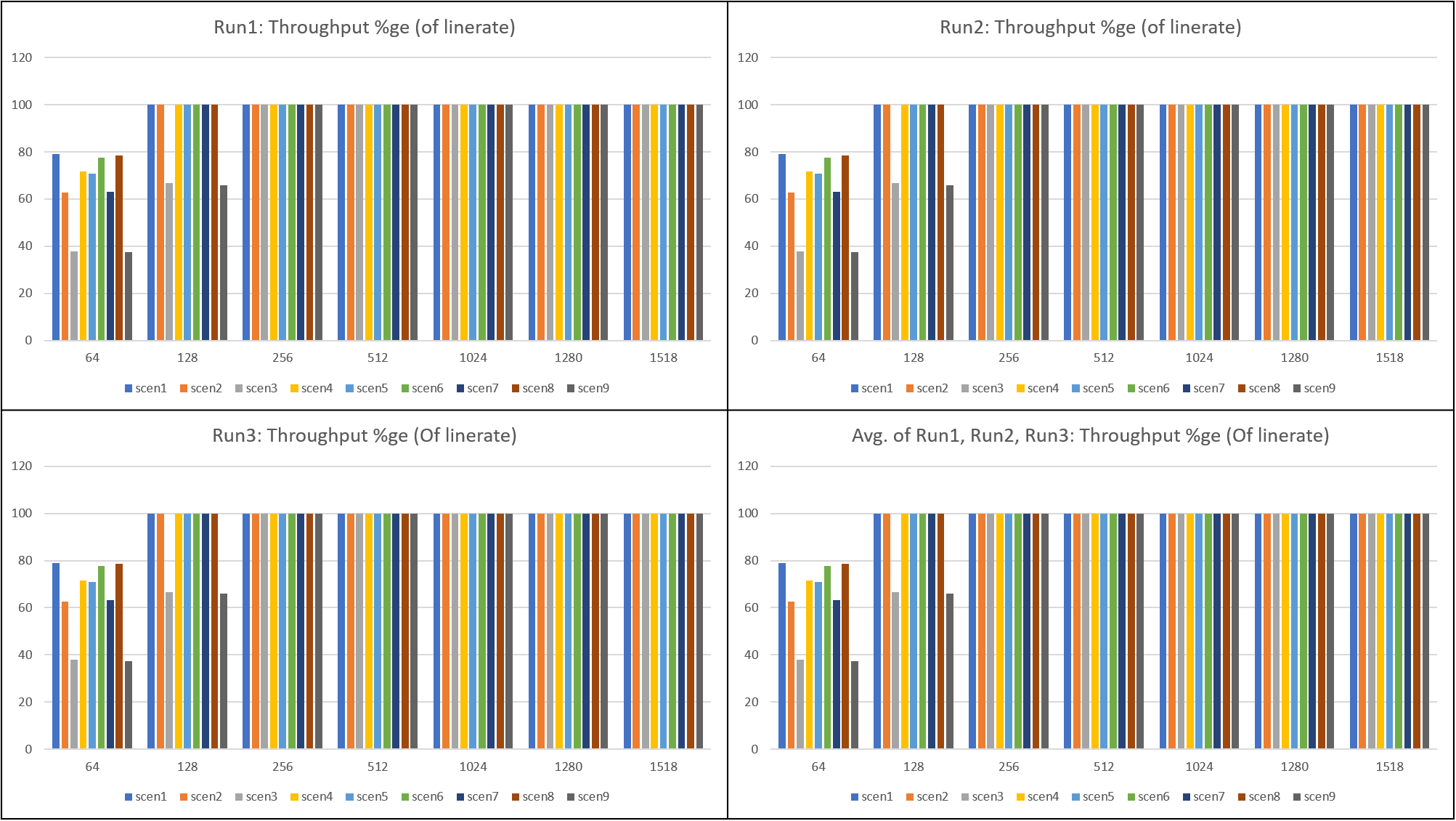

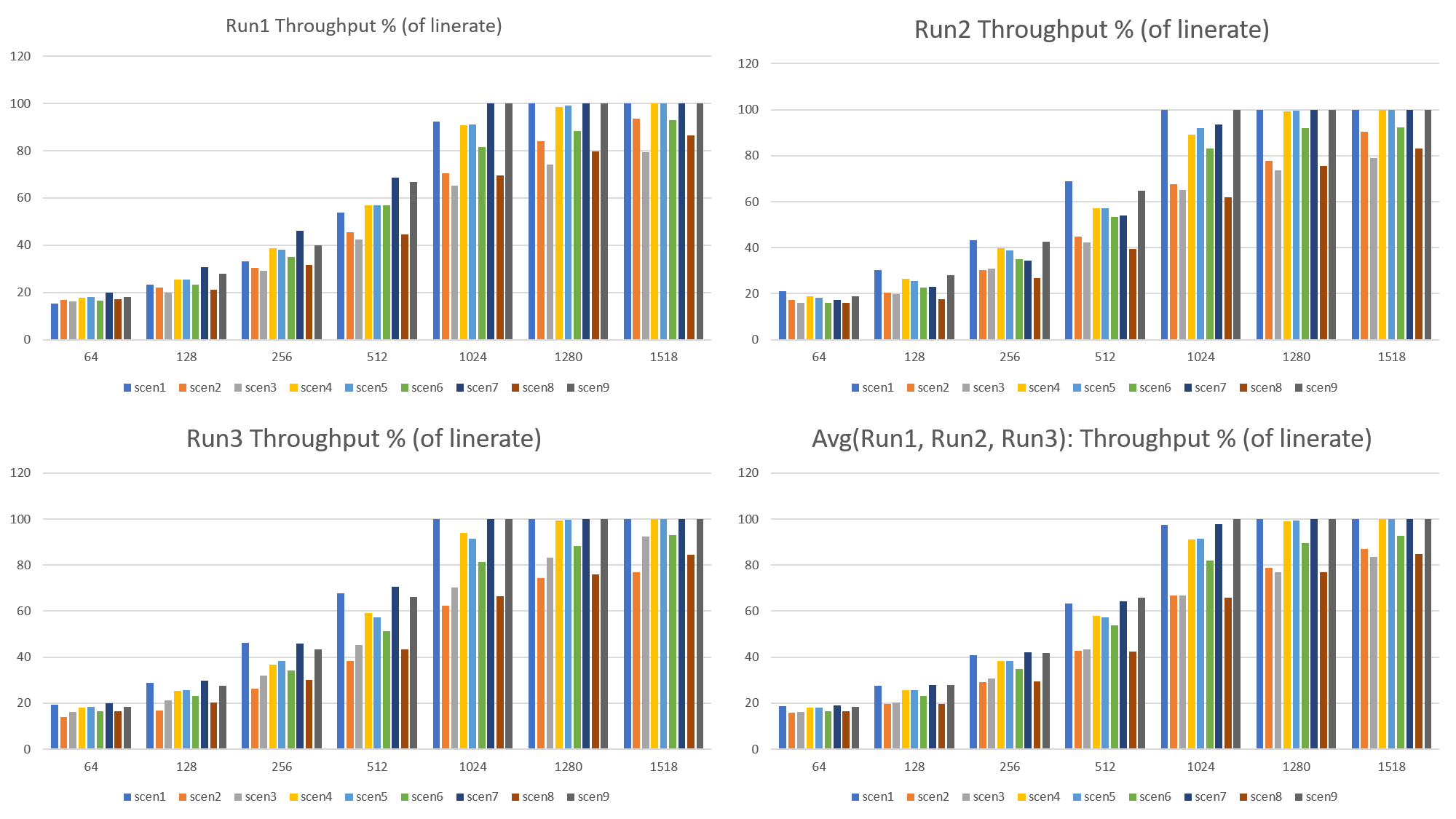

Results: PVP

RFC2544 Throughput Test Results

Continuous Throughput Test Results (Max Received Frame Rate at 100% of Line rate offered load)

Inferences

Theme: what is expected, what is unexpected.

P2P:

- Only the smaller frame sizes (64 and 128) matter. For frame sizes above 128, throughput remains similar.

- Scenarios 2 and 7 can be seen as the worst-case scenarios, with both PMDs running on a different NUMA node than the NICs. As expected, performance is consistently low for both Scenario 2 and Scenario 7.

- The interesting cases are Scenario 3 and Scenario 9. Here a single PMD ends up serving both NICs. This results in poorer performance than in Scenarios 2 and 7.

- Scenarios 1, 6, and 8 can be seen as the good cases, where each NIC is served by its own separate PMD.
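The grouping in the P2P inferences above can be reproduced from the scenario table with a small classifier. This is a sketch under stated assumptions: cores 0-21 are on NUMA-0 and 22-43 on NUMA-1 (as listed for the PVP scenarios), and eno5/eno6 are attached to NUMA-0 while ens801f2/ens802f3 are attached to NUMA-1. The NIC attachment is inferred from the inferences, not stated explicitly in these notes. The sketch also assumes that a PMD serves only NICs on its own NUMA node whenever such a PMD exists.

```python
from collections import Counter

# Assumption (inferred, not stated in the notes): NIC-to-NUMA attachment.
NIC_NUMA = {"eno5": 0, "eno6": 0, "ens801f2": 1, "ens802f3": 1}

# P2P scenarios from the summary table: (PMD cores, DUT ports).
P2P_SCENARIOS = {
    1: ((4, 5), ("eno5", "eno6")),
    2: ((22, 23), ("eno5", "eno6")),
    3: ((4, 22), ("eno5", "eno6")),
    4: ((4, 5), ("eno5", "ens801f2")),
    5: ((22, 23), ("eno5", "ens801f2")),
    6: ((4, 22), ("eno5", "ens801f2")),
    7: ((4, 5), ("ens801f2", "ens802f3")),
    8: ((22, 23), ("ens801f2", "ens802f3")),
    9: ((4, 22), ("ens801f2", "ens802f3")),
}


def core_numa(core):
    """Map a core ID to its NUMA node (assumes 0-21 -> 0, 22-43 -> 1)."""
    return 0 if core <= 21 else 1


def classify(pmd_cores, nics):
    """Label a scenario by how its PMDs line up with the NICs' NUMA nodes."""
    pmds_per_node = Counter(core_numa(c) for c in pmd_cores)
    nics_per_node = Counter(NIC_NUMA[n] for n in nics)
    states = set()
    for node, nic_count in nics_per_node.items():
        local_pmds = pmds_per_node[node]  # Counter returns 0 if absent
        if local_pmds == 0:
            states.add("remote")
        elif local_pmds < nic_count:
            states.add("shared")
        else:
            states.add("dedicated")
    if states == {"dedicated"}:
        return "dedicated local PMD per NIC"
    if states == {"remote"}:
        return "all PMDs remote"
    if "shared" in states:
        return "one local PMD shared by both NICs"
    return "one NIC local, one NIC remote"


for number, (pmds, nics) in P2P_SCENARIOS.items():
    print(number, classify(pmds, nics))
```

Under these assumptions the classifier puts Scenarios 1, 6 and 8 in the dedicated group, 2 and 7 in the all-remote group, and 3 and 9 in the shared-PMD group. Scenarios 4 and 5 fall into a mixed group that the inferences do not discuss.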

PVP:

In these scenarios, we ensure that at least one PMD is always mapped to each NUMA node to which a physical NIC in use is attached.
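The PMD placement rule stated above can be checked against the PVP scenario table. The sketch below decodes each PMD mask from the table and reports any NIC NUMA node left without a local PMD. It assumes cores 0-21 are on NUMA-0 and 22-43 on NUMA-1, and that eno5/eno6 sit on NUMA-0 while ens801f2/ens802f3 sit on NUMA-1. The NIC attachment is an inference, not something stated in these notes. Function names are illustrative.

```python
# Assumption (inferred, not stated in the notes): NIC-to-NUMA attachment.
NIC_NUMA = {"eno5": 0, "eno6": 0, "ens801f2": 1, "ens802f3": 1}

# PVP scenarios from the summary table: (PMD mask, VNF cores, DUT ports).
PVP_SCENARIOS = {
    1: (0xF0, (8, 9), ("eno5", "eno6")),
    2: (0xF0, (22, 23), ("eno5", "eno6")),
    3: (0xF0, (8, 22), ("eno5", "eno6")),
    4: (0xC00030, (8, 9), ("eno5", "ens801f2")),
    5: (0xC00030, (24, 25), ("eno5", "ens801f2")),
    6: (0xC00030, (8, 24), ("eno5", "ens801f2")),
    7: (0x3C00000, (26, 27), ("ens801f2", "ens802f3")),
    8: (0x3C00000, (4, 5), ("ens801f2", "ens802f3")),
    9: (0x3C00000, (4, 26), ("ens801f2", "ens802f3")),
}


def core_numa(core):
    """Map a core ID to its NUMA node (assumes 0-21 -> 0, 22-43 -> 1)."""
    return 0 if core <= 21 else 1


def mask_to_cores(mask):
    """Return the core IDs whose bits are set in the CPU mask."""
    return [bit for bit in range(mask.bit_length()) if (mask >> bit) & 1]


def nic_nodes_without_pmd(pmd_mask, nics):
    """Return NUMA nodes that host a NIC in use but no PMD core."""
    pmd_nodes = {core_numa(c) for c in mask_to_cores(pmd_mask)}
    nic_nodes = {NIC_NUMA[n] for n in nics}
    return sorted(nic_nodes - pmd_nodes)


def vnf_nodes(vnf_cores):
    """Return the NUMA nodes the VNF cores are spread over."""
    return sorted({core_numa(c) for c in vnf_cores})


for number, (mask, vnf, nics) in PVP_SCENARIOS.items():
    missing = nic_nodes_without_pmd(mask, nics)
    print(number, "PMD rule ok" if not missing else f"no PMD on {missing}",
          "VNF on NUMA", vnf_nodes(vnf))
```

With the rule satisfied in every scenario, what varies across the PVP scenarios is where the VNF cores sit relative to the NICs and PMDs.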

Generic:

Possible Variations

- Increase the Number of CPUs to 4 for the VNF.

- Phy2phy case (no VNF).

- Try different forwarding VNF

- Different Virtual Switch (VPP)

- RxQ Affinity.

Notes on Documentation

- Must view log files: the qemu threads need to match the intended scenario for the VM.

- Christian created qemu command (and documentation) - check this for VM mapping

- SR: CT's command is only the host

- qemu command line: -smp 2 should do this (simulates two NUMA nodes). Need to see how the VM sees its architecture: numactl -H