...

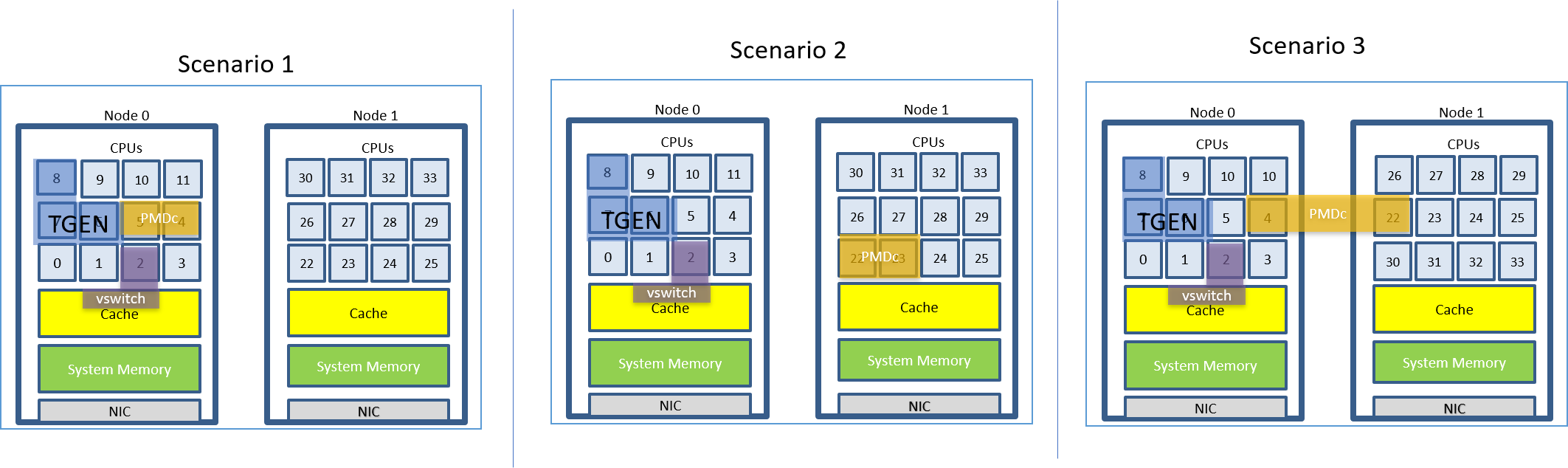

V2V Scenarios

Summary of V2V Scenarios

| Scenario | Possible Core Allocations (PMD cores, CPU mask) | TGen Port Info |
|---|---|---|
| 1 | PMDs: 4, 5 (0x30) | 2 Virtual Ports 10G |
| 2 | PMDs: 22, 23 (0xC00000) | 2 Virtual Ports 10G |
| 3 | PMDs: 4, 22 (0x400010) | 2 Virtual Ports 10G |
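The hex values in the allocation column appear to be the OVS-DPDK PMD CPU mask for the listed cores (bit n set for core n). A minimal sketch, assuming that interpretation, for deriving or double-checking such masks; the function names are illustrative only:

```python
def pmd_cpu_mask(core_ids):
    """Return a hex CPU mask with bit n set for each core id n."""
    mask = 0
    for core in core_ids:
        if core < 0:
            raise ValueError(f"invalid core id: {core}")
        mask |= 1 << core
    return hex(mask)


def cores_from_mask(mask):
    """Inverse: list the core ids whose bits are set in a hex mask string."""
    value = int(mask, 16)
    return [bit for bit in range(value.bit_length()) if (value >> bit) & 1]


# Recompute the three V2V allocations from the table.
for cores in ([4, 5], [22, 23], [4, 22]):
    print(cores, pmd_cpu_mask(cores))
```

Under the same assumption, such a mask would be applied with `ovs-vsctl set Open_vSwitch . other_config:pmd-cpu-mask=0x30`.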

P2P Scenarios

Summary of P2P Scenarios

...

V2V Scenarios: OVS-PMD and Virtual-Interface Mappings

| Scenario | Mappings |
|---|---|
| Virtual Interfaces | Bridge trex_br |
| Scenario-1 | pmd thread numa_id 0 core_id 4: |
| Scenario-2 | pmd thread numa_id 1 core_id 22: |
| Scenario-3 | pmd thread numa_id 0 core_id 4: |

PVP Scenarios: OVS-PMD and Interface (Physical and Virtual) Mappings

| Scenario | Mappings |
|---|---|
| 1/2/3 | pmd thread numa_id 0 core_id 4: |
| 4 | pmd thread numa_id 0 core_id 4: pmd thread numa_id 1 core_id 23: |
| 5 | pmd thread numa_id 0 core_id 4: pmd thread numa_id 1 core_id 22: |
| 6 | pmd thread numa_id 0 core_id 4: pmd thread numa_id 1 core_id 23: |
| 7/8/9 | pmd thread numa_id 1 core_id 22: |

P2P Scenarios: OVS-PMD and Physical-Interface Mappings

| Scenario | Mappings |
|---|---|
| 1 | pmd thread numa_id 0 core_id 4: |
| 2 | pmd thread numa_id 1 core_id 22: |
| 3 | pmd thread numa_id 0 core_id 4: |
| 4 | pmd thread numa_id 0 core_id 4: |
| 5 | pmd thread numa_id 1 core_id 22: |
| 6 | pmd thread numa_id 0 core_id 4: |
| 7 | pmd thread numa_id 0 core_id 4: |
| 8 | pmd thread numa_id 1 core_id 22: |
| 9 | pmd thread numa_id 0 core_id 4: pmd thread numa_id 1 core_id 22: |
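The mapping cells above read like excerpts of `ovs-appctl dpif-netdev/pmd-rxq-show` output. Assuming that line format, the sketch below parses such output into a PMD-to-queue map and flags the two placement issues discussed in the results: one PMD polling more than one port, and a port polled from a NUMA node other than its NIC's. The `nic_numa` dictionary is a hypothetical input the tester would fill in (for example from the NIC's PCI `numa_node` attribute in sysfs); the function names are illustrative.

```python
import re

# Line formats assumed from `ovs-appctl dpif-netdev/pmd-rxq-show` output.
PMD_RE = re.compile(r"pmd thread numa_id (\d+) core_id (\d+):")
PORT_RE = re.compile(r"port:\s*(\S+)\s+queue-id:\s*(\d+)")


def parse_pmd_rxq_show(text):
    """Map (numa_id, core_id) -> list of (port, queue_id) tuples."""
    mapping = {}
    current = None
    for line in text.splitlines():
        pmd = PMD_RE.search(line)
        if pmd:
            current = (int(pmd.group(1)), int(pmd.group(2)))
            mapping[current] = []
            continue
        port = PORT_RE.search(line)
        if port and current is not None:
            mapping[current].append((port.group(1), int(port.group(2))))
    return mapping


def placement_warnings(mapping, nic_numa):
    """Flag PMDs polling several ports and ports polled across NUMA nodes.

    nic_numa is a hypothetical {port_name: numa_node} dict supplied by the tester.
    """
    warnings = []
    for (numa_id, core_id), rxqs in sorted(mapping.items()):
        ports = sorted({port for port, _ in rxqs})
        if len(ports) > 1:
            warnings.append(f"core {core_id} polls {len(ports)} ports: {', '.join(ports)}")
        for port in ports:
            node = nic_numa.get(port)
            if node is not None and node != numa_id:
                warnings.append(
                    f"{port} (NUMA {node}) polled by core {core_id} (NUMA {numa_id})")
    return warnings


CONFIG_A = """\
pmd thread numa_id 0 core_id 4:
  isolated : false
  port: dpdk0 queue-id: 0
  port: dpdk1 queue-id: 0
pmd thread numa_id 1 core_id 22:
  isolated : false
"""

for warning in placement_warnings(parse_pmd_rxq_show(CONFIG_A), {"dpdk0": 0, "dpdk1": 0}):
    print(warning)
```

With both NICs on NUMA node 0, configuration-a in the results below triggers only the shared-core warning, while configuration-b would instead produce one cross-NUMA warning per port.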

Possible Variations

- Increase the number of CPUs for the VNF to 4.

- Phy2phy case (no VNF).

- Try a different forwarding VNF.

- Use a different virtual switch (e.g., VPP).

- RxQ affinity.
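One way to drive the RxQ-affinity variation is the OVS-DPDK Interface option `other_config:pmd-rxq-affinity`, whose value is a comma-separated list of `<rx-queue-id>:<core-id>` pairs. The helper below is a hypothetical sketch, not part of any tooling used in these tests, that builds the corresponding `ovs-vsctl` command:

```python
import shlex


def rxq_affinity_command(port, queue_to_core):
    """Build an ovs-vsctl command that pins a port's Rx queues to PMD cores.

    queue_to_core maps rx queue id -> core id; the pairs are emitted as
    "queue:core" and joined by commas, sorted by queue id.
    """
    pairs = ",".join(f"{queue}:{core}" for queue, core in sorted(queue_to_core.items()))
    return shlex.join([
        "ovs-vsctl", "set", "Interface", port,
        f"other_config:pmd-rxq-affinity={pairs}",
    ])


print(rxq_affinity_command("dpdk0", {0: 22}))
```

For instance, `rxq_affinity_command("dpdk0", {0: 22})` matches the dpdk0 placement of configuration-b in the results. By default, a PMD with pinned queues is reported as `isolated : true` and is not assigned unpinned queues.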

Summary of Key Results and Points of Learning

1. Performance degradation due to cross-NUMA placement of the NIC, vSwitch, and VNF ranges from 50-60% for smaller packets (64, 128, and 256 bytes) to 0-20% for larger packets (> 256 bytes).

2. The worst performance was observed in PVP scenarios where all PMD cores and NICs are on the same NUMA node but the VNF's cores span NUMA nodes. Hence, a VNF's cores are best allocated within a single NUMA node. If the VIM prevents a VNF from being instantiated across multiple NUMA nodes, this issue is avoided.

3. In P2P setups, variations in CPU assignment have no effect on performance for packet sizes above 128 bytes. V2V setups, however, still show performance differences at the larger packet sizes of 512 and 1024 bytes.

4. Continuous traffic tests and RFC 2544 Throughput tests using Binary Search with Loss Verification (BSwLV) give more consistent results across multiple runs. Hence, these methods should be preferred over the legacy RFC 2544 methods that use plain binary search or linear search.

5. A single PMD core serving multiple interfaces degrades performance more than cross-NUMA placement does, so such configurations are best avoided. For example, if both physical NICs are attached to NUMA node 0 (core ids 0-21), configuration-a below, where the PMD on core 4 polls both dpdk0 and dpdk1, performs worse than configuration-b, where two PMDs on NUMA node 1 (cores 22 and 23) each poll one port.

Configuration-a

pmd thread numa_id 0 core_id 4:

isolated : false

port: dpdk0 queue-id: 0

port: dpdk1 queue-id: 0

pmd thread numa_id 1 core_id 22:

isolated : false

Configuration-b

pmd thread numa_id 1 core_id 22:

isolated : false

port: dpdk0 queue-id: 0

pmd thread numa_id 1 core_id 23:

isolated : false

port: dpdk1 queue-id: 0

6. In PVP setups, average latency shows opposite trends under continuous traffic testing and under the RFC 2544 Throughput test (with the BSwLV search algorithm): for the RFC 2544 Throughput test, average latency is lower for smaller packets and higher for larger packets, whereas continuous traffic testing shows the reverse.

Note: For result 6, could this be caused by continuous traffic filling all queues for the duration of the trial? The RFC 2544 Throughput methods (and those of the present document) allow the queues to empty and the DUT to stabilize between trials.
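The results recommend RFC 2544 Throughput with Binary Search with Loss Verification. A minimal sketch of the idea, assuming one simple reading of loss verification: a trial that exceeds the loss threshold is repeated up to `verify_retries` times, and the rate counts as a failure only if every repetition also exceeds it. The `trial` callback, the retry rule, and all parameter names are assumptions; the actual BSwLV definition may use a different repetition count or acceptance rule.

```python
def binary_search_with_loss_verification(trial, max_rate, resolution=0.01,
                                         verify_retries=1, max_loss=0.0):
    """Return the highest offered rate judged to meet the loss criterion.

    trial(rate) runs one trial and returns its frame-loss ratio (caller-supplied).
    Loss verification (assumed rule): a trial whose loss exceeds max_loss is
    repeated up to verify_retries times; the rate fails only if all attempts fail.
    """
    def passes(rate):
        # any() stops at the first attempt that meets the loss criterion.
        return any(trial(rate) <= max_loss for _ in range(1 + verify_retries))

    if passes(max_rate):
        return max_rate
    low, high = 0.0, max_rate  # invariant: low passes (or is 0), high fails
    while high - low > resolution:
        rate = (low + high) / 2
        if passes(rate):
            low = rate
        else:
            high = rate
    return low


# Toy DUT that drops frames above 7.0 (units of max_rate, e.g. Mpps).
print(binary_search_with_loss_verification(lambda r: 0.0 if r <= 7.0 else 0.01, 10.0))
```

The cost of verification is test time: each failing rate consumes up to `1 + verify_retries` trials before the search moves on.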

Notes on Documentation

- Must view log files: QEMU threads need to match the intended scenario for the VM.

- Christian created the QEMU command (and documentation); check this for the VM mapping.

- SR: CT's command covers only the host.

- QEMU command line: -smp 2 provides two vCPUs but by itself presents a single NUMA node to the guest; simulating two NUMA nodes also requires -numa options. Need to see how the VM sees its architecture: numactl -H
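To check on a Linux host that QEMU threads match the intended scenario, one option is to read each thread's name and CPU affinity under `/proc/<pid>/task`. A sketch, where the QEMU process id and the intended core set are hypothetical inputs the tester supplies:

```python
import os


def thread_affinities(pid):
    """Return {thread_id: (thread_name, set_of_allowed_cpus)} for a process (Linux only)."""
    task_dir = f"/proc/{pid}/task"
    result = {}
    for entry in os.listdir(task_dir):
        tid = int(entry)
        try:
            with open(f"{task_dir}/{entry}/comm") as f:
                name = f.read().strip()
            # sched_getaffinity accepts a thread id on Linux.
            result[tid] = (name, os.sched_getaffinity(tid))
        except (FileNotFoundError, ProcessLookupError):
            continue  # the thread exited while we were iterating
    return result


def off_plan_threads(pid, intended_cpus):
    """Return (tid, name, sorted_cpus) for threads allowed to run outside intended_cpus."""
    intended = set(intended_cpus)
    return [(tid, name, sorted(cpus))
            for tid, (name, cpus) in sorted(thread_affinities(pid).items())
            if not cpus <= intended]
```

For example, `off_plan_threads(qemu_pid, vnf_cores)` (both names hypothetical) lists threads not confined to the VNF's cores. Inside the guest, `numactl -H` then shows how many NUMA nodes the VM sees.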